A major aspect of NetworkManager is that it provides a D-Bus API for other applications. When programming such client applications it is therefore helpful to understand D-Bus.

It took me a long time of trial and error to understand how D-Bus works. I hope to share some of the things I learned and give my perspective on D-Bus.

Useful links

Much has already been written about D-Bus. Here are some useful links.

- www.freedesktop.org:D-Bus: Official Page for D-Bus. See further links in the documentation section.

- wikipedia.org:D-Bus: D-Bus on Wikipedia.

- www.freedesktop.org:Introduction: Introduction to D-Bus.

- dbus.freedesktop.org:Specification: The D-Bus specification.

More:

- smcv.pseudorandom.co:nonblocking: Why you might want to avoid (pseudo) blocking calls.

- github.com:dbus-broker: Repository of an alternative, Linux-only dbus-daemon implementation.

- 0pointer.net:sd-bus: An introduction to systemd’s sd-bus library, which also introduces D-Bus.

What is D-Bus and how to Understand it?

D-Bus allows processes to communicate. The official page gives a good introduction here. The introduction is also a good read.

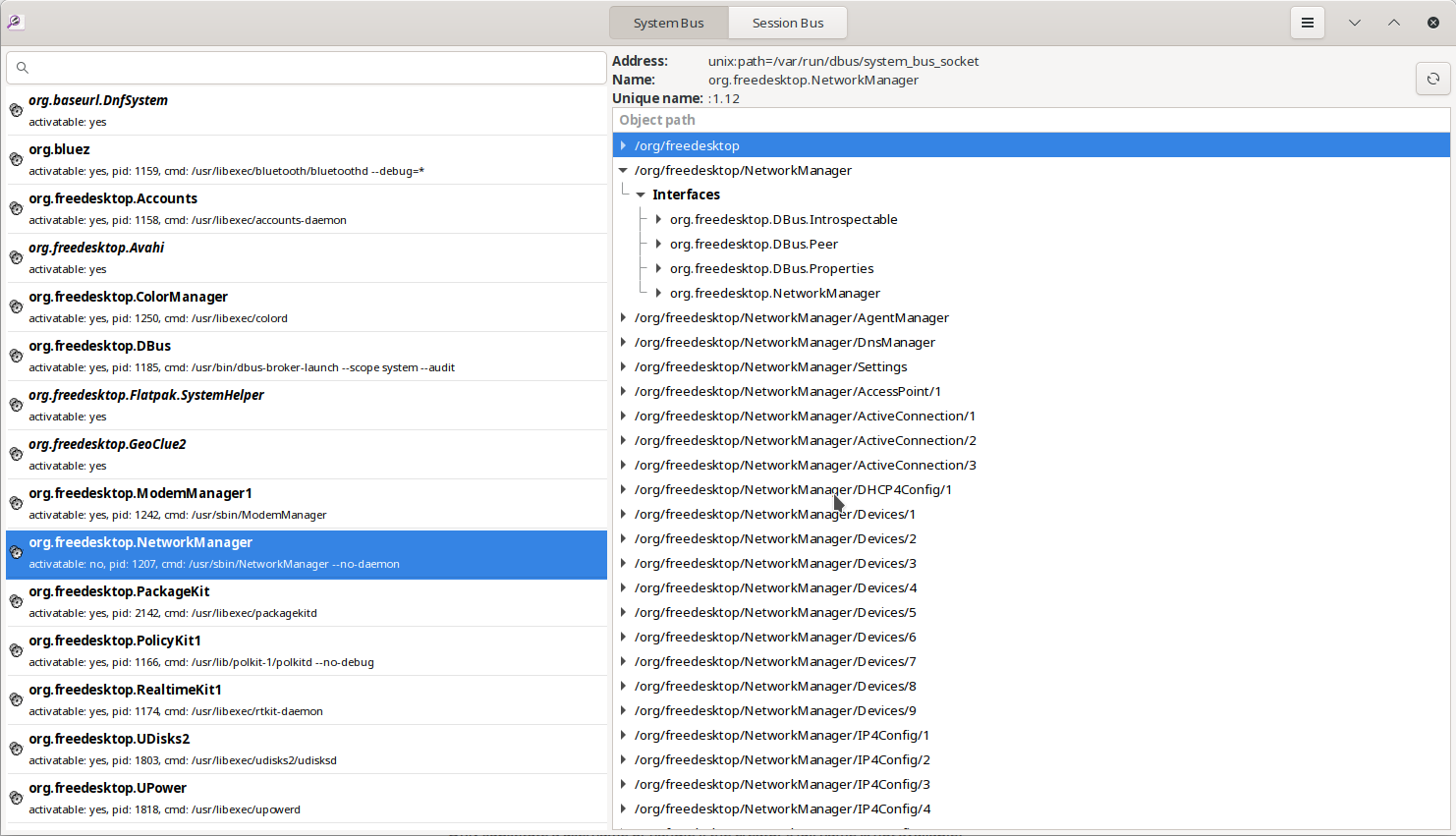

To understand D-Bus I find it most useful just to look at it. A helpful GUI application is called d-feet.

Install d-feet from your distribution. When you start it, you can select the system or the session bus. You will see the bus names, and by clicking on them you can drill down to the objects, their interfaces, and members. You see signals, methods, properties and their type signatures. This is useful to get an initial grasp and to correlate it to the description from the introduction link.
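The type signatures shown by d-feet are compact strings built from single-letter type codes. As a minimal sketch, assuming only the type codes of the D-Bus specification, the following splits a signature into its single complete types. The helper names are mine, and the code does not validate the stricter rules for dict entries (two fields, basic key type, only inside arrays).

```python
BASIC_TYPES = set("ybnqiuxtdsogh")  # byte, bool, ints, double, string, path, signature, fd


def _end_of_complete_type(sig, pos):
    """Return the index just past the single complete type starting at pos."""
    if pos >= len(sig):
        raise ValueError("truncated signature")
    code = sig[pos]
    if code in BASIC_TYPES or code == "v":  # basic type or variant
        return pos + 1
    if code == "a":  # array: followed by exactly one complete type
        return _end_of_complete_type(sig, pos + 1)
    if code == "(" or code == "{":  # struct or dict entry
        close = ")" if code == "(" else "}"
        pos += 1
        while pos < len(sig) and sig[pos] != close:
            pos = _end_of_complete_type(sig, pos)
        if pos >= len(sig):
            raise ValueError("unterminated container")
        return pos + 1
    raise ValueError(f"unknown type code {code!r}")


def split_signature(sig):
    """Split a signature into its single complete types."""
    parts, pos = [], 0
    while pos < len(sig):
        end = _end_of_complete_type(sig, pos)
        parts.append(sig[pos:end])
        pos = end
    return parts


# The arguments of the PropertiesChanged signal:
print(split_signature("sa{sv}as"))  # ['s', 'a{sv}', 'as']
```

Read this way, "sa{sv}as" is a string, a dictionary from strings to variants, and an array of strings.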

There are also command line tools like glib’s gdbus, Qt’s qdbus and systemd’s busctl. They conveniently support tab completion and let you introspect the bus and invoke method calls from the terminal.

    # Call GetManagedObjects on NetworkManager using busctl.
    busctl --json=pretty \
        call \
        org.freedesktop.NetworkManager \
        /org/freedesktop \
        org.freedesktop.DBus.ObjectManager \
        GetManagedObjects
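For quick scripts, the same call can be driven from Python by running busctl as a subprocess. This is only a sketch: the helper names are mine, it assumes busctl is installed and a system bus is running, and it blocks until busctl exits, so it is suited to one-off scripts rather than to applications.

```python
import json
import subprocess


def busctl_call_argv(service, path, interface, method, signature=None, args=()):
    """Build the argument vector for `busctl call` with JSON output."""
    argv = ["busctl", "--json=short", "call", service, path, interface, method]
    if signature is not None:
        # busctl expects the signature first, then the arguments as plain words.
        argv.append(signature)
        argv.extend(str(arg) for arg in args)
    return argv


def busctl_call(service, path, interface, method, signature=None, args=()):
    """Run busctl and return the parsed JSON reply (needs a running bus)."""
    argv = busctl_call_argv(service, path, interface, method, signature, args)
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return json.loads(result.stdout)


print(" ".join(busctl_call_argv(
    "org.freedesktop.NetworkManager",
    "/org/freedesktop",
    "org.freedesktop.DBus.ObjectManager",
    "GetManagedObjects",
)))
```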

The Bus itself

While parts of D-Bus would also allow for direct peer to peer communication, the common way of operation involves a message broker daemon. There are two implementations, dbus-daemon and the Linux-only dbus-broker.

You can have any number of buses, but commonly there is the system bus (where there are services like NetworkManager or systemd) and the session bus (for the user applications of the desktop environment).

With D-Bus, you send messages to the broker daemon, which then forwards them to other peers according to the message’s destination. That means, there is always an additional context switch involved and currently there is no solution for zero copy transfer.

The broker itself is always reachable at the well-known bus name org.freedesktop.DBus. At this name, various methods are available for querying and acting on the bus. For example, you can request well-known bus names (RequestName) or ask for information about a peer (GetConnectionUnixUser).
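To make the routing role of the broker concrete, here is a toy in-memory model. It is not real D-Bus: the class ToyBroker and the name com.example.Service are made up for illustration, and it ignores the queueing and replacement flags that the real RequestName supports. It only shows that peers get a unique name on connect, may claim a well-known name, and that messages are forwarded by destination.

```python
class ToyBroker:
    """In-memory stand-in for a bus daemon that routes by destination name."""

    def __init__(self):
        self._next_id = 0
        self._inboxes = {}  # unique name -> list of delivered messages
        self._owners = {}   # well-known name -> unique name of its owner

    def connect(self):
        """Register a new peer and hand out its unique bus name."""
        self._next_id += 1
        unique = f":1.{self._next_id}"
        self._inboxes[unique] = []
        return unique

    def request_name(self, unique, well_known):
        """Claim a well-known name; fails if somebody else owns it."""
        if well_known in self._owners:
            return False
        self._owners[well_known] = unique
        return True

    def send(self, sender, destination, body):
        """Forward a message to the peer that the destination resolves to."""
        target = self._owners.get(destination, destination)
        if target not in self._inboxes:
            raise LookupError(f"no such name on the bus: {destination}")
        self._inboxes[target].append(
            {"sender": sender, "destination": destination, "body": body}
        )

    def inbox(self, unique):
        return self._inboxes[unique]


broker = ToyBroker()
service = broker.connect()
client = broker.connect()
broker.request_name(service, "com.example.Service")
broker.send(client, "com.example.Service", "Ping")
print(broker.inbox(service))
```

Note that the receiver sees the sender's unique name, which is how peers identify each other on the bus.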

Properties and Standard D-Bus Interfaces

D-Bus presents an API that has an object oriented feel. This means you’ll see the terms “objects”, “interfaces”, “methods”, “signals” and “properties”. These terms have a D-Bus specific meaning, but they resemble what you’d expect when coming from an object oriented programming language like Java.

It’s often useful to think of D-Bus properties as something special, given the way D-Bus presents them (look at them with d-feet). However, it’s also useful to realize that they are merely implemented in terms of methods and signals. They boil down to the Get(), GetAll() and Set() methods and the PropertiesChanged signal on the standard org.freedesktop.DBus.Properties interface.

The org.freedesktop.DBus.Properties interface is one of a few standard D-Bus interfaces that an object can implement to follow common patterns on the D-Bus API. Another one is org.freedesktop.DBus.ObjectManager, which lets a client retrieve all objects and their properties in one call. NetworkManager implements both the Properties and the ObjectManager interfaces.
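The claim that properties are just methods plus a signal can be sketched with a toy object. This is an in-memory model, not a D-Bus binding: ToyObject, the interface com.example.Demo and the property Enabled are hypothetical. The method names and the three arguments of PropertiesChanged (interface name, changed properties, invalidated properties) follow org.freedesktop.DBus.Properties.

```python
class ToyObject:
    """Toy object whose properties are reachable only via methods and a signal."""

    def __init__(self, interface, values):
        self._props = {interface: dict(values)}
        self._handlers = []

    def connect_signal(self, handler):
        """Subscribe a callable that receives (signal_name, *arguments)."""
        self._handlers.append(handler)

    def Get(self, interface, name):
        return self._props[interface][name]

    def GetAll(self, interface):
        return dict(self._props[interface])

    def Set(self, interface, name, value):
        props = self._props[interface]
        if name in props and props[name] == value:
            return  # nothing changed, so no signal
        props[name] = value
        for handler in self._handlers:
            # Arguments: interface name, changed properties, invalidated names.
            handler("PropertiesChanged", interface, {name: value}, [])


events = []
obj = ToyObject("com.example.Demo", {"Enabled": False})
obj.connect_signal(lambda *signal: events.append(signal))
obj.Set("com.example.Demo", "Enabled", True)
print(obj.GetAll("com.example.Demo"))
print(events)
```

A client that keeps a copy of the properties would call GetAll() once and then apply each PropertiesChanged signal to its copy.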

Everything is a Message

D-Bus works by sending messages on a socket. This applies to 1:1 request-reply method calls and 1:n publish-subscribe signals.

I think this is an important point to realize. Whatever you do, you are just

- sending a method call message to another peer on the bus.

- waiting for a response to a method call. D-Bus and the D-Bus libraries guarantee that every method call receives exactly one reply (or fails with a timeout, cancellation or other error).

- receiving a signal notification message, after previously having subscribed to it.

It’s an important feature that the order of messages is preserved, at least for messages between the same two peers. The only exception is that a response to a method call might overtake a response to an earlier call when the callee chooses to answer the later request first.
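Replies can overtake each other without confusion because every message carries a serial number and every reply names the serial of the call it answers. A minimal sketch of that bookkeeping follows; ToyConnection, the dictionary layout and the member names Slow and Fast are made up for illustration, while the message types and the reply serial are concepts from the D-Bus specification.

```python
import itertools


class ToyConnection:
    """Tracks pending method calls and dispatches incoming messages."""

    def __init__(self):
        self._serials = itertools.count(1)
        self._pending = {}          # serial of the call -> reply callback
        self._signal_handlers = []
        self.outgoing = []          # messages that would be written to the socket

    def call(self, destination, member, on_reply):
        serial = next(self._serials)
        self._pending[serial] = on_reply
        self.outgoing.append({"type": "method_call", "serial": serial,
                              "destination": destination, "member": member})
        return serial

    def subscribe(self, handler):
        self._signal_handlers.append(handler)

    def dispatch(self, message):
        if message["type"] in ("method_return", "error"):
            # Every call gets exactly one reply, so the entry is removed.
            self._pending.pop(message["reply_serial"])(message)
        elif message["type"] == "signal":
            for handler in self._signal_handlers:
                handler(message)


conn = ToyConnection()
replies = []
slow = conn.call("com.example.Service", "Slow", lambda m: replies.append(("Slow", m["body"])))
fast = conn.call("com.example.Service", "Fast", lambda m: replies.append(("Fast", m["body"])))
# The callee answers the later request first; the serials keep things straight.
conn.dispatch({"type": "method_return", "reply_serial": fast, "body": "fast done"})
conn.dispatch({"type": "method_return", "reply_serial": slow, "body": "slow done"})
print(replies)
```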

Messages are Asynchronous

Sending messages and waiting for reply is fundamentally asynchronous. D-Bus libraries and proxy objects sometimes make it look like synchronous, blocking calls. But by doing so, they wait for a method reply while queueing all other messages in the meantime. This messes up the order of messages and is therefore problematic for non-trivial uses. Simon McVittie explains this “pseudo” blocking well in [smcv.pseudorandom.co:nonblocking].
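The reordering caused by pseudo blocking can be shown with a toy message queue. This is a simplified model with made-up message names, not the behavior of any particular library: the blocking variant hands the reply to the caller first and only later processes the messages that were queued while it waited.

```python
def dispatch_in_order(incoming):
    """Asynchronous style: handle every message in arrival order."""
    return [message["name"] for message in incoming]


def pseudo_blocking_order(incoming, reply_serial):
    """Pseudo blocking: wait for one reply and queue everything else."""
    queued, handled = [], []
    stream = iter(incoming)
    for message in stream:
        if message.get("reply_serial") == reply_serial:
            handled.append(message["name"])  # the caller sees the reply first
            break
        queued.append(message["name"])       # deferred until the call returns
    handled.extend(queued)
    handled.extend(message["name"] for message in stream)
    return handled


incoming = [
    {"name": "signal StateChanged(1)"},
    {"name": "signal StateChanged(2)"},
    {"name": "reply GetState", "reply_serial": 7},
]
print(dispatch_in_order(incoming))
print(pseudo_blocking_order(incoming, reply_serial=7))
```

In the second case the application learns the current state from the reply and afterwards processes two signals that are already outdated.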

In my opinion, blocking API is problematic in general. Asynchronous API can be composed either in parallel or sequentially. Blocking API at a low layer, on the other hand, fundamentally limits how it can be used. It can only be composed sequentially, and the caller cannot do anything else in the meantime unless it uses multiple threads, which brings a whole other level of problems. For example, POSIX’s getaddrinfo() is notoriously problematic for being synchronous.
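The difference in composability can be sketched with Python’s asyncio. The function fake_call stands in for an asynchronous method call and the member names are hypothetical; no bus is involved. The same asynchronous building block can be awaited one after the other or run concurrently, while a blocking version would only allow the former.

```python
import asyncio


async def fake_call(member, log):
    """Stand-in for an asynchronous method call: send, yield, get the reply."""
    log.append(f"send {member}")
    await asyncio.sleep(0)  # give other tasks a chance, like waiting for I/O
    log.append(f"reply {member}")
    return f"{member}-result"


async def sequential(log):
    first = await fake_call("GetDevices", log)
    second = await fake_call("GetSettings", log)
    return [first, second]


async def parallel(log):
    # Both requests are sent before either reply is awaited.
    return await asyncio.gather(
        fake_call("GetDevices", log),
        fake_call("GetSettings", log),
    )


seq_log, par_log = [], []
asyncio.run(sequential(seq_log))
asyncio.run(parallel(par_log))
print(seq_log)
print(par_log)
```

The top-level asyncio.run() is where this program finally blocks, at the highest layer rather than inside the call itself.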

Interprocess communication (IPC), and D-Bus in particular, is a lower layer of an application. When building non-trivial applications on top of it, it is often better to compose more powerful asynchronous API. You can always wait for an asynchronous call to complete and thus block at the highest layer of the application.

No Client Library Wrapping D-Bus

Many projects provide a client library that wraps the D-Bus API. Often such a library should not exist. D-Bus is already a reasonably convenient API to use directly, so all you need is a D-Bus client library and the D-Bus API specification of the service you’d like to use. No need for a wrapper around D-Bus.

I say this even though NetworkManager provides such a library with libnm. For libnm, the justification is that NetworkManager’s API is large and that many uses need to fetch a lot of data from the D-Bus API. Maybe this is an area where NetworkManager’s D-Bus API should improve, to make common operations more easily accessible through fewer D-Bus calls. Anyway, libnm provides a client-side cache of the D-Bus API with NMClient, which is useful.

Depend on D-Bus

Sometimes people perceive applications that utilize D-Bus as heavyweight or bloated. This is a myth proven wrong by its use in most crucial parts of a modern Linux system beginning with the first user process, the systemd service manager itself. Services and applications that would choose to use plain UNIX domain sockets instead would either slightly reduce their footprint by omitting features like introspection or security policing via polkit or would have to re-implement them. Re-implementing would, of course, end up increasing the footprint.

Or maybe people object to D-Bus because it brings the additional dependency of a broker daemon and a client library. Note that the Linux kernel often gets criticised for being monolithic. Here we have useful functionality moved to a user space daemon and a library. This is mostly a good thing (aside from the runtime overhead and the current lack of zero copy transfers).

D-Bus is really just an IPC mechanism. It may not be perfect, but then again what is? It is adequate, as shown by its wide use. In practice it works so well, that the remaining issues have a low priority for getting fixed and worked on. This is the difference between existing, working software and theoretical, “optimal” solutions.

That is also why alternatives like varlink, bus1 or kdbus find it hard to compete or prevail. All this while D-Bus still saw improvements over the last years with glib’s GDBus, addition of systemd’s sd-bus or dbus-broker as an alternative to dbus-daemon.

D-Bus is most relevant for system applications or a desktop environment, whenever a distribution ships a set of software that needs to interoperate while responsibilities, capabilities and permissions are split to different processes and projects. But the biggest advantage of D-Bus may be of social nature: that it is ubiquitous on the freedesktop landscape.

For NetworkManager, D-Bus is the way. For example, there is a goal that NetworkManager runs in initrd, where historically there was no D-Bus daemon running. There is currently a mode where NetworkManager runs in initrd without D-Bus, but that means NetworkManager is either unable to communicate with various other software (no ModemManager, no wpa_supplicant, no bluez) or has to implement another form of IPC (unix sockets to talk to teamd). Implementing a second solution because the first one is lacking, usually leads to having two lacking solutions. So we rather get the dependency of D-Bus into initrd.

Trust in D-Bus

NetworkManager does not only depend on D-Bus. It trusts that it works.

In general, software should not assume that other software or components work correctly. This can make software more reliable in the face of partial errors on the system. But there is a base of non-negotiable requirements. We trust kernel, libc, and glib to do what they are documented and expected to do. This doesn’t mean that, for example, the open() syscall cannot fail with ENOENT. Usually we need to handle the case that a file unexpectedly doesn’t exist. However, depending on open() means that if kernel and libc give us a non-negative integer, we rely on this being in fact the file descriptor we expect. NetworkManager also relies on being able to allocate memory, to use netlink sockets, and to open certain file descriptors. A sure way to crash NetworkManager is to limit RLIMIT_NOFILE or the available memory, because NetworkManager does not expect or handle being unable to perform such basic operations.

In this sense, we trust D-Bus to be functional and to work as documented. What is helpful is that D-Bus provides a lot of guarantees that we gladly rely on. Simple examples are that other D-Bus peers are identified by a unique bus name, that parameters to methods are valid according to their type signatures, and that method calls are guaranteed to get some sort of reply. Relying on such guarantees immensely simplifies the implementation of D-Bus applications.

Another example is restarting the broker daemon: NetworkManager and much other software don’t handle that. You will also have to restart those services, which can quickly amount to a reboot. The solution for the restart use-case is not that every piece of software carries the complexity for supporting it, but rather that the daemon supports a restart without disrupting its bus. Apparently this use-case isn’t important enough, maybe because the daemon can reload certain configuration changes. The currently practical solution is thus to avoid restarting the daemon or to fall back to a reboot.

Avoiding Proxies

Various D-Bus client libraries expose a proxy-like API that feels like a local object. I don’t like that very much. For one, it hides the simple underlying nature of just sending messages. Also, it can encourage the use of blocking API, something that should be avoided altogether.

Depending on details, D-Bus proxies cache object properties and state like the unique bus name of the peer. Most of D-Bus (sending messages back and forth) has little state associated and is easy to understand. Caching properties means that an additional state is kept, which gets updated at non obvious times. The worst is that often this state is not the sole truth on the client side. Instead, the application gets the property values from the proxy and stores them in another application specific context. The result is that state gets duplicated on the client side.

In terms of glib’s GDBus, GDBusConnection is basically all you need. It lets you make method calls and subscribe to signals. GDBusProxy and GDBusObjectManager add a lot of complexity on top, which in my opinion is often not helpful. In NetworkManager, we dropped most uses of GDBusProxy and gdbus-codegen in favor of plain GDBusConnection (for example here or here).

Conclusion

D-Bus is the popular IPC mechanism on the freedesktop software stack. It allows independent system services to communicate, and provide services to each other and to user applications, like a GUI.

This popularity and ubiquity of D-Bus is well deserved. It simplifies the implementation of applications that are loosely coupled, but still need to integrate with each other. It is therefore well suited to and popular on the desktop, but not only there. Headless systems also have system services that need to talk to each other.

D-Bus is thus the IPC of choice for NetworkManager.